In the flesh: translating 2D scans into 3D prints

Peeking at the algorithms which are informing patients and aiding surgeons

“The patient had been told that their knee looked like a smashed eggshell,” says Niall Haslam, EMBL alumnus and Chief Technology Officer (CTO) at axial3D – a medical 3D printing company. “But they were still planning to ride a downhill mountain bike race the following month.” For both Haslam and the clinicians involved, it was clear that the patient hadn’t fully understood the surgical situation or its implications. The hospital scans that they’d seen had provided detailed information about the bones inside their leg. But sometimes only a physical object can help someone understand the reality of a physical problem. “Only when they held the 3D printed shards did they grasp the enormity of the situation,” says Haslam.

Bringing information within reach

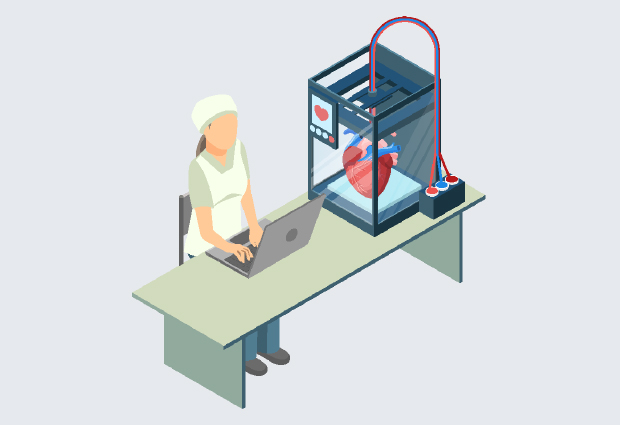

Since the early 2010s, the business of 3D printing has grown rapidly. Its versatility means that anything from innovative lab tools to educational knick-knacks can be produced relatively quickly and cheaply. Medical 3D printing is also on the rise and, at axial3D, life-size anatomical models can be produced within 48 hours. Haslam’s experience at EMBL – as a former postdoc in the Gibson team – has helped axial3D to grow from a start-up into a successful business. At EMBL, he learned how to usefully bring together large amounts of data onto one website so that researchers could design better antibodies. Now, at axial3D, he uses these skills to handle the digital side of printing 3D anatomical models. This gives both clinicians and patients the chance to see, hold and truly understand what is going on underneath the skin.

The production process for such a model appears simple. First, clinicians log in to axial3D’s website to upload a 2D scan. Within two days this is translated into a 3D printed object, ready to dispatch by post. In between these steps, however, finely crafted algorithms – written by Haslam and his team – run the show.

Creating works of art

For the patient’s shattered knee, a machine learning algorithm identifies the bone in each scan image by drawing an outline around it. The identified regions are then manually checked and the algorithm is told what it did well, and what it failed at. This allows it to learn and make better decisions next time. In this respect, machine learning algorithms are similar to humans: practice makes perfect.
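
The check-and-correct loop described above can be sketched in a few lines of Python. This is only an illustration, not axial3D's algorithm: a plain brightness threshold stands in for the machine learning model, and the function names, pixel values and candidate thresholds are all hypothetical. The "feedback" is an overlap score (the Dice coefficient) between the outline the program drew and the version corrected by a human.

```python
def outline_bone(slice_pixels, threshold):
    """Mark every pixel at or above `threshold` as bone (True)."""
    return [[value >= threshold for value in row] for row in slice_pixels]


def dice_score(predicted, corrected):
    """Overlap between two masks: 1.0 is a perfect match, 0.0 is no overlap."""
    overlap = predicted_total = corrected_total = 0
    for p_row, c_row in zip(predicted, corrected):
        for p, c in zip(p_row, c_row):
            overlap += p and c
            predicted_total += p
            corrected_total += c
    if predicted_total + corrected_total == 0:
        return 1.0  # both masks are empty, so they agree
    return 2 * overlap / (predicted_total + corrected_total)


def learn_threshold(slice_pixels, corrected, candidates):
    """Keep the candidate threshold whose outline best matches the human check."""
    return max(
        candidates,
        key=lambda t: dice_score(outline_bone(slice_pixels, t), corrected),
    )


# Hypothetical 3x3 scan slice (brightness values) and its human-checked mask.
scan_slice = [[10, 200, 220], [15, 180, 100], [5, 12, 8]]
checked_mask = [
    [False, True, True],
    [False, True, False],
    [False, False, False],
]
best = learn_threshold(scan_slice, checked_mask, [50, 150, 210])
```

Here the threshold of 150 reproduces the checked mask exactly, so it is the one kept for the next scan. A real model adjusts many more parameters than a single threshold, but the principle of scoring its output against a human correction is the same.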

A 3D digital representation of the bone then needs to be created. Just as Michelangelo chiselled David out of a marble block, another computer program chips away at a virtual cuboid, uncovering the sections of bone that were identified within. At this point, more human interaction is needed to file away inconsistencies and neaten up the edges before the final, physical sculpture can be created. Instructed by the digital file, the 3D printer zips around, systematically laying down a scaffold of plastic threads until the shape of the bone becomes recognisable.
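
The sculpting analogy can also be sketched in code. The example below is a simplified illustration under stated assumptions, not the program used at axial3D: it treats the stack of outlined scan images as layers of a solid virtual block and removes every small cube (voxel) that was not marked as bone. The function names and the tiny two-slice example are hypothetical.

```python
def carve_volume(bone_masks):
    """Start from a solid block and chip away every voxel not marked as bone."""
    depth = len(bone_masks)
    height = len(bone_masks[0])
    width = len(bone_masks[0][0])
    # The uncarved "marble block": every voxel is filled.
    block = [[[True] * width for _ in range(height)] for _ in range(depth)]
    for z in range(depth):
        for y in range(height):
            for x in range(width):
                if not bone_masks[z][y][x]:
                    block[z][y][x] = False  # remove material outside the bone
    return block


def count_voxels(volume):
    """Number of filled voxels left in the volume."""
    return sum(voxel for layer in volume for row in layer for voxel in row)


# Two hypothetical 2x2 slices, one mask per scan image.
masks = [
    [[True, False], [True, True]],
    [[False, False], [True, False]],
]
carved = carve_volume(masks)
remaining = count_voxels(carved)
```

Of the eight voxels in the starting block, four remain after carving. Production pipelines often go a step further and convert such a voxel volume into a surface mesh before printing, which is where the manual tidying of edges described above would come in.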

Although the underlying algorithms are complex, it was important for Haslam and his team that the online platform remain simple. “It doesn’t matter if you’ve written the most powerful algorithm available,” says Haslam. “If no one can work out how to use it, it may as well not exist.”

Speaking the same language

Making complicated information accessible is a goal that Haslam shares with the doctors who use axial3D to explain complex surgical concepts to their patients. Hospital scans can look more like an abstract painting than the neat diagrams often used to depict human anatomy inside textbooks. A soft organ, such as the heart, is particularly prone to these abstractions because it’s also squeezed between the lungs and chest muscles, which can slightly change its shape. Add unexpected deformities – caused by developmental diseases in children, for example – and the heart’s actual shape might be very different from the one expected.

This is one reason why children’s cardiac surgeons are using 3D printed models of the heart to explain to parents which parts of their child’s heart haven’t developed properly – and how surgery can help. When parents are better informed, both parties share a level of understanding and parents can meaningfully question the surgeon performing the operation. Importantly, the risk of a parent delaying or even refusing to give consent for life-saving surgery is also decreased.

Surgeons can also benefit from these models by using them to help plan surgical procedures and avoid possible complications. Important decisions about where within an organ to cut can be more easily taken before the first incision is made. Fewer complications can mean that patients need to spend less time under general anaesthetic. Even from a pragmatic point of view, avoiding unexpected increases in surgery times can also prevent cancellations later in the day or overtime for surgical staff.

3D printing of anatomical models is providing solutions to real-world problems. To do this, it brings together people with very different backgrounds, including patients, doctors and computer scientists. Yet despite the differences in people’s background knowledge and specialisms, everyone needs to be able to communicate effectively with one another. “If I say one thing but you understand another, then we can’t usefully move forward together with an idea,” says Haslam. “This is true, whether you’re a patient speaking with a surgeon, or a doctor ordering a 3D print from our website. We need to be speaking the same language.”